Disk caching is a key technique for improving the performance of hard disk drives (HDDs). As storage devices have evolved, so have the methods for making them faster and more efficient. Disk caching reduces data access time by keeping frequently used information in a faster storage medium, such as RAM (Random Access Memory). It can be combined with file compression, which reduces the size of files so that they take less space to store and less time to read or write.

Combining disk caching with file compression can noticeably improve performance, especially in environments that handle large amounts of data. Used together, the two techniques can make file access more responsive and reduce latency. This article explains how disk caching works, what file compression offers, and how combining the two can improve hard disk performance.

What Is Disk Caching?

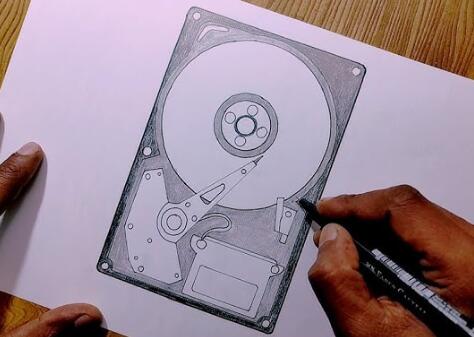

At its core, disk caching involves the use of a high-speed storage area (usually RAM or SSD) to store frequently accessed data. When a computer requests data from the hard disk, the operating system first checks whether the required data is available in the cache. If it is, the data can be retrieved much faster than if it were accessed directly from the slower hard disk. This process reduces the time it takes to load files, applications, and other system resources, which in turn boosts overall performance.
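To make the lookup order concrete, here is a minimal read-through cache sketch. The `SlowDisk` class is a hypothetical stand-in for a hard disk (a dictionary of blocks with an artificial delay), and the cache is an unbounded dictionary; real operating system caches work on pages or blocks and must also evict data, which is covered below.

```python
import time


class SlowDisk:
    """Hypothetical stand-in for a hard disk: block number -> bytes."""

    def __init__(self, blocks, delay=0.01):
        self.blocks = blocks
        self.delay = delay  # simulated seek + rotational latency, in seconds
        self.reads = 0      # how many times the "disk" was actually touched

    def read(self, block_no):
        time.sleep(self.delay)
        self.reads += 1
        return self.blocks[block_no]


class ReadCache:
    """Check the in-memory cache first; fall back to the disk on a miss."""

    def __init__(self, disk):
        self.disk = disk
        self.cache = {}
        self.hits = 0
        self.misses = 0

    def read(self, block_no):
        if block_no in self.cache:      # cache hit: served from memory
            self.hits += 1
            return self.cache[block_no]
        self.misses += 1                # cache miss: go to the slow disk
        data = self.disk.read(block_no)
        self.cache[block_no] = data     # keep a copy for the next request
        return data


disk = SlowDisk({n: bytes([n]) * 512 for n in range(8)})
cache = ReadCache(disk)
for block in [0, 1, 0, 0, 2, 1]:
    cache.read(block)
print(cache.hits, cache.misses, disk.reads)  # 3 3 3
```

Half of the six requests are answered from memory, so the simulated disk is touched only three times.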

A typical disk cache works on the principle of locality of reference: programs tend to access the same data, or data stored close to it, repeatedly over short periods of time. Two types of locality are usually distinguished:

Temporal locality, where recently accessed data is likely to be accessed again.

Spatial locality, where data close to the recently accessed data is likely to be needed soon.

Disk caching exploits both types of locality: it keeps recently accessed blocks in the cache (temporal locality) and often reads ahead the blocks that follow a requested block before they are asked for (spatial locality). Once the cache holds useful data, subsequent requests are more likely to hit the cache, which significantly reduces access times.
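Spatial locality is usually exploited through read-ahead. The sketch below is a simplified model, not any particular operating system's implementation: on a miss it fetches the requested block plus the next few blocks in one request. The window size of 4 blocks is an arbitrary assumption for illustration.

```python
class ReadAheadCache:
    """On a miss, fetch the requested block plus the next `window - 1` blocks."""

    def __init__(self, disk_blocks, window=4):
        self.disk_blocks = disk_blocks  # block number -> bytes (simulated disk)
        self.window = window            # assumed read-ahead size, in blocks
        self.cache = {}
        self.disk_requests = 0

    def read(self, block_no):
        if block_no not in self.cache:
            self.disk_requests += 1     # one sequential request covers the window
            for n in range(block_no, block_no + self.window):
                if n in self.disk_blocks:
                    self.cache[n] = self.disk_blocks[n]
        return self.cache[block_no]


blocks = {n: bytes(512) for n in range(16)}
cache = ReadAheadCache(blocks, window=4)
for n in range(16):           # a sequential scan, e.g. reading a file start to end
    cache.read(n)
print(cache.disk_requests)    # 4 (a cache without read-ahead would need 16)
```

Read-ahead pays off for sequential access; for random access it can waste memory on blocks that are never used, which is why real systems adjust the window to the observed pattern.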

The Mechanics of Disk Caching

Disk caching handles two kinds of operations:

Read cache: When data is read from the disk, a copy is kept in the cache. If the same data is requested again, it is delivered from the cache instead of the disk. This is especially beneficial for workloads that read the same data repeatedly, such as databases querying frequently used tables or applications that load the same files again and again.

Write cache: In write caching (often called write-back caching), data that needs to be written is first placed in the cache, and the actual write to disk happens later in the background. This lets the system continue with other tasks instead of waiting for the disk. However, if the system crashes or loses power before the cached data reaches the disk, that data can be lost.
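The write-cache behavior, including its data-loss window, can be modeled in a few lines. In this sketch a plain dictionary stands in for the disk, and `flush()` represents the background write-out that a real system would trigger on a timer or on an explicit sync; both are assumptions made for illustration.

```python
class WriteBackCache:
    """Writes land in memory first and reach the disk only on flush()."""

    def __init__(self, disk):
        self.disk = disk      # dict standing in for on-disk blocks
        self.cache = {}
        self.dirty = set()    # blocks changed in the cache but not yet on disk

    def write(self, block_no, data):
        self.cache[block_no] = data
        self.dirty.add(block_no)  # returns immediately; the disk write is deferred

    def read(self, block_no):
        if block_no in self.cache:
            return self.cache[block_no]
        data = self.disk[block_no]
        self.cache[block_no] = data
        return data

    def flush(self):
        # Copy every dirty block to the disk, then mark everything clean.
        for block_no in sorted(self.dirty):
            self.disk[block_no] = self.cache[block_no]
        self.dirty.clear()


disk = {}
wb = WriteBackCache(disk)
wb.write(7, b"new data")
print(wb.read(7), 7 in disk)  # b'new data' False  (lost if the system crashed now)
wb.flush()
print(7 in disk)              # True
```

Between `write()` and `flush()` the newest data exists only in memory, which is exactly the risk described above.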

Modern operating systems use sophisticated algorithms to manage cache size, decide which data to evict, and keep the most useful data available in the cache. Common replacement (eviction) algorithms include:

Least Recently Used (LRU): When the cache is full and new data must be added, the data that has gone unused for the longest time is removed.

First In, First Out (FIFO): The data that entered the cache first is removed first, regardless of how often it has been used since.

Adaptive Replacement Cache (ARC): A hybrid approach that tracks both how recently and how frequently data has been accessed, and continuously adjusts the balance between the two based on the observed access pattern.
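The difference between LRU and FIFO shows up as soon as one block is "hot". The sketch below implements both policies with Python's `collections.OrderedDict`, which keeps keys in order and supports `move_to_end` and `popitem(last=False)`. The access pattern and the capacity of 2 are made up for illustration.

```python
from collections import OrderedDict


class Cache:
    """Fixed-capacity cache that evicts in LRU or FIFO order."""

    def __init__(self, capacity, policy="lru"):
        self.capacity = capacity
        self.policy = policy
        self.data = OrderedDict()  # front of the dict = next eviction candidate
        self.hits = 0
        self.misses = 0

    def access(self, key):
        if key in self.data:
            self.hits += 1
            if self.policy == "lru":
                self.data.move_to_end(key)  # LRU: a hit makes the entry most recent
            return                          # FIFO: a hit leaves the order unchanged
        self.misses += 1
        if len(self.data) >= self.capacity:
            self.data.popitem(last=False)   # evict the entry at the front
        self.data[key] = True               # new entries join at the back


pattern = ["A", "B", "A", "C", "A", "D", "A", "E"]  # "A" is a hot block
for policy in ("lru", "fifo"):
    cache = Cache(capacity=2, policy=policy)
    for key in pattern:
        cache.access(key)
    print(policy, cache.hits, cache.misses)
# lru 3 5
# fifo 2 6
```

FIFO evicts the hot block "A" simply because it arrived first, while LRU keeps it. ARC is not shown because it is considerably more involved: it maintains separate LRU lists for blocks seen once and blocks seen repeatedly, plus "ghost" lists of recently evicted keys that guide how much space each list gets.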

File Compression: A Complement to Disk Caching

While disk caching focuses on optimizing the speed of data access, file compression targets storage efficiency by reducing the size of files. Compressed files take up less space, which not only increases the amount of data that can be stored but also reduces the amount of data that must be read from or written to the disk.

Compression can be either lossless or lossy. In lossless compression, no data is lost, and the original file can be reconstructed exactly from the compressed file. Common examples include ZIP archives and PNG images. Lossy compression, on the other hand, permanently discards some data, which is acceptable for media such as images, audio, and video (e.g., JPEG images or MP3 audio).
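A lossless round trip can be demonstrated with Python's standard `zlib` module, which implements DEFLATE, the algorithm commonly used in ZIP archives and PNG images. The sample data is deliberately repetitive; real files compress less predictably.

```python
import zlib

original = b"disk caching " * 1000       # highly repetitive sample data
compressed = zlib.compress(original, 6)  # DEFLATE at a medium compression level
restored = zlib.decompress(compressed)

assert restored == original              # lossless: bit-for-bit identical
print(len(original), len(compressed))    # original is 13000 bytes; compressed is far smaller
```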

The benefits of file compression for improving hard disk performance include:

Reduced Storage Requirements: Compressed files take up less space, allowing users to store more data on a hard disk. Because each file occupies fewer disk blocks, fewer blocks need to be read or written whenever the file is accessed.

Faster Data Access: Compressed files are smaller, so less data has to be transferred between the disk and the cache, which can speed up data access when combined with disk caching.

Optimized Bandwidth Use: For systems that involve data transmission over networks, compressed files reduce the amount of data that needs to be sent, resulting in faster communication and less bandwidth usage.

Potentially Longer Drive Life: By reducing the amount of data that has to be read and written, file compression may reduce wear on the drive and help prolong its lifespan.
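The storage and I/O savings come down to simple arithmetic: a file of $n$ bytes occupies $\lceil n / B \rceil$ blocks of size $B$. The sketch below assumes 4096-byte blocks and a hypothetical, highly repetitive log file, and it compresses the whole file at once; real file systems typically compress in fixed-size chunks, so actual savings will be smaller and depend heavily on the data.

```python
import math
import zlib

BLOCK_SIZE = 4096  # assumed file-system block size, in bytes


def blocks_needed(n_bytes):
    """Number of whole blocks required to hold n_bytes."""
    return math.ceil(n_bytes / BLOCK_SIZE)


# Hypothetical log file: 5000 identical 40-byte lines (200000 bytes in total).
log = b"".join(b"2024-01-01 INFO request served in 12 ms\n" for _ in range(5000))
packed = zlib.compress(log)

print(blocks_needed(len(log)), blocks_needed(len(packed)))  # 49 blocks vs. far fewer
```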

How Disk Caching and File Compression Work Together

Disk caching and file compression complement each other by improving both the speed and efficiency of hard disk operations. Here’s how they work together to boost performance:

More Efficient Cache Usage: Compressed files take up less space in the cache, which means that more data can be stored in the cache at any given time. This increases the likelihood that future data requests will hit the cache, thereby reducing the number of slow disk accesses.

Faster Reads and Writes: Since compressed files are smaller, they can be read from and written to the disk more quickly. When a read or write operation occurs, less data needs to be moved between the cache and the hard disk, leading to faster performance.

Improved I/O Operations: Input/output (I/O) operations are often a bottleneck in computing systems. File compression can reduce the amount of data involved in I/O operations, thus minimizing delays. Disk caching further enhances this by storing frequently accessed data in faster storage, reducing the need for repeated I/O operations.

Energy Efficiency: With less data being read from and written to the hard disk, the energy spent on disk activity can be reduced, although compressing and decompressing the data consumes some CPU energy in return. This is particularly relevant for mobile devices and data centers, where power consumption is a critical concern.
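The "more efficient cache usage" point can be illustrated with a cache whose capacity is measured in bytes rather than entries. This is a hypothetical design for illustration, not how any specific operating system stores its cache: the same LRU cache is run once with raw values and once with `zlib`-compressed values under a 64 KiB budget, using made-up, highly compressible records.

```python
import zlib
from collections import OrderedDict


class ByteBudgetLRU:
    """LRU cache limited by total stored bytes, optionally compressing values."""

    def __init__(self, max_bytes, compress=False):
        self.max_bytes = max_bytes
        self.compress = compress
        self.data = OrderedDict()
        self.used = 0

    def put(self, key, value):
        stored = zlib.compress(value) if self.compress else value
        if key in self.data:
            self.used -= len(self.data.pop(key))
        self.data[key] = stored
        self.used += len(stored)
        while self.used > self.max_bytes:   # evict least recently used entries
            _, old = self.data.popitem(last=False)
            self.used -= len(old)

    def get(self, key):
        if key not in self.data:
            return None
        self.data.move_to_end(key)          # mark as most recently used
        stored = self.data[key]
        # Decompression cost is paid on every hit when compression is enabled.
        return zlib.decompress(stored) if self.compress else stored


pages = {n: f"record {n} ".encode() * 400 for n in range(50)}  # 3600-4000 bytes each
plain = ByteBudgetLRU(64 * 1024)
packed = ByteBudgetLRU(64 * 1024, compress=True)
for n, page in pages.items():
    plain.put(n, page)
    packed.put(n, page)
print(len(plain.data), len(packed.data))  # 16 50
```

With the same memory budget, the uncompressed cache keeps only the last 16 records while the compressed cache keeps all 50. The trade-off is the `zlib.decompress` call on every hit.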

Real-World Applications of Disk Caching and File Compression

In many computing environments, disk caching and file compression are used together to improve performance. Some real-world applications include:

Database Systems: Large databases often deal with massive amounts of data that are accessed frequently. By combining disk caching and file compression, database systems can reduce latency, improve query response times, and increase storage efficiency.

Web Servers: Websites often serve compressed content to reduce page load times, for example CSS and JavaScript compressed with gzip or Brotli, and images in already-compressed formats such as JPEG or PNG. Disk caching on the server side can further speed up the delivery of these files, leading to a more responsive user experience.

Backup Systems: Backup software frequently uses file compression to reduce the size of backup files. Disk caching can be used to store recently accessed backups, allowing for quicker retrieval in case of a system restore.

Operating Systems: Modern operating systems often incorporate both disk caching and file compression as part of their performance optimization strategies. For example, Windows and macOS use caching to improve application launch times and general file access speed, while also offering compression utilities to free up storage space.

Challenges and Limitations

Despite the significant advantages, there are some challenges associated with the combined use of disk caching and file compression:

Increased CPU Usage: Compressing and decompressing files requires processing power, which may lead to increased CPU usage. In systems with limited resources, this can result in slower performance during compression operations.

Cache Eviction Policies: Inefficient cache management can lead to frequent cache misses, negating the performance benefits of disk caching. Choosing the right eviction policy and cache size is crucial for maximizing performance.

Decompression Overhead: When compressed files are read from the cache, they must be decompressed before use. This introduces additional overhead, which can offset some of the performance gains from faster data access.

Data Integrity Risks: In write caching, data is temporarily stored in the cache before being written to disk. If the system crashes before the data is written to the disk, there is a risk of data loss. Modern systems mitigate this risk with techniques such as journaling file systems, explicit flush (sync) operations, and battery-backed write caches, but it remains a concern in certain scenarios.
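A common way to limit wasted CPU time is to keep a block uncompressed when compression does not save enough space, so that incompressible data (already-compressed media, encrypted files) is never decompressed later. The sketch below is a hypothetical scheme: the 1-byte format header and the 12.5% minimum-saving threshold are assumptions chosen for illustration, and the fastest `zlib` level is used to keep CPU cost low.

```python
import os
import zlib

RAW, DEFLATED = b"\x00", b"\x01"  # hypothetical 1-byte header marking the format


def pack(block, min_saving=0.125):
    """Compress a block, but store it raw if compression saves too little."""
    compressed = zlib.compress(block, 1)  # level 1: fastest, least CPU
    if len(compressed) <= len(block) * (1 - min_saving):
        return DEFLATED + compressed
    return RAW + block                    # not worth the decompression cost


def unpack(stored):
    header, body = stored[:1], stored[1:]
    return zlib.decompress(body) if header == DEFLATED else body


text_block = b"hello world " * 341  # compressible (4092 bytes)
random_block = os.urandom(4096)     # effectively incompressible

for block in (text_block, random_block):
    stored = pack(block)
    assert unpack(stored) == block
    print(stored[:1] == DEFLATED, len(stored))
```

The text block is stored compressed, while the random block is stored as-is with only one extra header byte, so reading it back costs no decompression.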

About us and this blog

Panda Assistant is built on the latest data recovery algorithms, ensuring that no file is too damaged, too lost, or too corrupted to be recovered.


We believe that data recovery shouldn’t be a daunting task. That’s why we’ve designed Panda Assistant to be as easy to use as it is powerful. With a few clicks, you can initiate a scan, preview recoverable files, and restore your data all within a matter of minutes.